[Image via John Hugg]

Overview

This year’s New England Database Summit was held in the Stata Center at MIT. If you haven’t been there, it’s a pretty neat building, with some rather odd architecture. I’d say the conference attendees were 70% academic, primarily researchers and professors from MIT, Brown, the University of Wisconsin-Madison, a little bit of Northeastern and Harvard. The other 30% were businesses—I spotted people from Hadapt, Novartis, Endeca, IBM, VoltDB,and TokuTek. Facebook’s head MySQL guy, Mark Callaghan, was there to give one of the keynotes. Microsoft and EMC were the sponsors, and a bunch of lectures came from Dave DeWitt’s U-Wisconsin/Jim Gray Systems Lab crew . About half of the talks concerned NoSQL and Hadoop, while the other half were for traditional databases (mostly MySQL) with a smattering of hardware in between. Overall I found it enlightening to see what may be coming down the pipeline.

Keynote – Johannes Gehrke (Cornell) on Declarative Data Driven Coordination

The heart of this talk was a set of extensions to SQL that basically allows one to make an “entangled query.” An example entangled query might be “what are the set of classes I can take, which will all have one friend of mine in them.” As one’s set of classes depends upon others’ sets, the queries to determine the sets are said to be entangled. Other examples given were for MMO raid scheduling, wedding gift registries (“what are the set of gifts not yet purchased”), booking plane tickets with conditions (“What are the sets of flights that go from New York to Boston in the morning, on JetBlue, and I want to sit next to Larry Page”). The system was still trying to keep ACID, although due to not being able to really resolve anything until the other side makes a choice, it’s really eventually consistent. The flip side of these queries were entangled transactions. Rather than booking a specific flight or seat one might just book whatever is “best” in the set from an entangled query. One wouldn’t actually know what was booked until later. Guaranteed booking in case one gets an empty set was actually a piece of future work, which was a little surprising. It looks to me like this could be very interesting and helpful, but it still has some kinks that need to be worked out. External constraints are a hugely limiting factor, and multiple nested constraints (A will only sit with B, who won’t sit with C, who will only sit with A) make the entire thing very difficult to solve or it will fall apart in short order. At least one person asked about this in a roundabout way, and didn’t get a satisfactory answer.

Session 1 – Daniel Bruckner (MIT) on Curating Data at Scale: The Data Tamer System

This session was about a mostly automated process for cleaning up raw web data and putting it into proper columns for querying. I have a little bit of experience with this, and didn’t see anything that was particularly revolutionary. The case study used was goby.com, which uses this technique under the hood. Basically, web spiders using regular expressions can mass scrape sites to collect pieces of data, like the price of admission, where this is, what it’s called, when is it open, contact info, etc. This raw data can then be sorted and attached to a specific thing (with goby a thing is an event or place). Piece of technology I found rather neat was that they don’t necessarily preset the schema of what properties a thing has, instead adding them as new ones appear. It wasn’t clear to me that this was automated, but their UI for managing the data was pretty slick. The “mostly automated” part came up at the end of the talk, where it was revealed that 10 to 20% of the data needs to be manually filtered by an analyst, which isn’t surprising when dealing with messy data.

Session 2 – Willis Lang (U-Wisconsin) on Energy-Conscious Data Management Systems

This was a mostly academic talk. Basically, no one is really looking at the trade-offs between power and performance in software. It was shown that a certain set of techniques, like specific map/reduce tasks, certain searches, etc. might not be as performant but will reach a specific required performance while using less power. Much of the session was spent detailing the actual experiments done to show this. The power savings chosen for a rather arbitrary performance level came out to about 12%.

Session 3 – Jeong-Hyon Hwang (SUNY Albany) on G* – A Parallel System for Efficiently Managing Large Graphs

This was another academic talk, but the premise was rather interesting if it ever comes to fruition. G* is a graph API for managing graph data that runs efficiently on Hadoop. It is scheduled for release August 2012, and will be open source. There is not currently a way to test it, and its end performance and stability is unknown but for those with a need to analyze graph data, G* could prove very helpful.

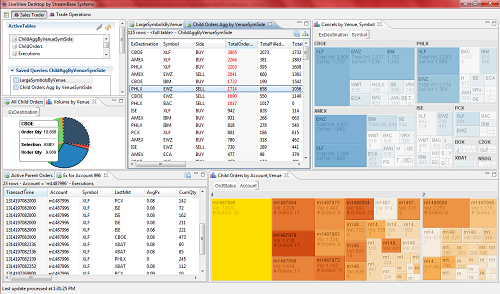

Session 3 – Richard Tibbetts (StreamBase) on StreamBase LiveView

This was a business/marketing session. LiveView is a pretty compelling product. They are capable of continuously streaming live data (financial feeds, mostly) into constantly updated views that have been designed by user analysts. As changes happen in the streaming data, the actual data view and results a user sees are changed before their eyes. Currently, LiveView is in production with a few unnamed large financial firms. Some random performance targets given out were that they can handle 50k updates/sec, with 100ms propagation time to the end user. I specifically asked if this was a target or if they were actually meeting these numbers, and they claimed to be “destroying them.” Future work includes dealing with messier data, more events and alerting for data changes, and incorporating more pre-built analytics (possibly from other organizations).

Session 5 – David Karger (MIT) on Documents with Databases Inside Them

This was a purely academic talk with a neat premise. Dido consists of an entire data viewer application with attached data, and a WYSIWYG editor for the data viewer, which are appended to an html document. Essentially, this turns the document into a web application, but it doesn’t need any sort of network connection as all the functionality is captured locally in the document. If a user wanted to make a new application, all he/she needs to do is copy the file, and possibly change the underlying data set. This came with an argument against cloud computing – instead of running an application on the cloud, just make every document include a lightweight, usable application that can be edited and created locally. One caveat was that applications with massive underlying data sets, even if much of these were never viewed (eg Google Maps), couldn’t possibly be stored locally, and in these big data cases they’d have to be fetched from the cloud. A call to action was made to make httpd for SQL and Hadoop so that accessing data sources is as easy as making an html page.

Keynote 2 – Mark Callaghan (Facebook) on Why Performance is Overrated

Facebook still maintains an absolutely massive number of MySQL instances, and Callaghan is one of Facebook’s ten (!) operations guys who basically keep the damn thing running. The primary point of the talk was to say that at Facebook, they don’t care about peak performance, but instead care about average/constant performance. The rest of the lecture was devoted to how they do everything in their power to avoid stalls in processing. Much of what was presented was very specific to Facebook’s needs. Their current git log for mysql has 452 changes, most of which are being kept internal to the company, but they do occasionally submit patches for MySQL itself. Since the Oracle acquisition this process has become slower. Towards the end of the talk, the Callaghan mentioned that they do have a need to transfer MySQL data into Hadoop, but they are still doing batch transactions to do it because none of the other technology really works.

Session 6 – Daniel Abadi (Hadapt) on Turning Hadoop into an All-Purpose Data Processing Platform

First: Daniel Abadi is one of the fastest speakers I’ve ever heard, while still be understandable. He must have condensed a one hour lecture into 20 minutes. The lecture consisted of a general overview of Hadapt’s datastore system. Basically, Hadapt feels that having a traditional DBMS next to a Hadoop instance is silly architecture, and are trying to bring some of the DBMS-like features into Hadoop. They aren’t actually sure yet what the best way to store the data is. Abadi had a few hecklers towards the end, as Hadapt has apparently been flip-flopping on this issue. (I believe right now they are using a hacked up version of PostgreSQL.)

Session 7 – Mohamed Y. Eltabahk (WPI) on CoHadoop: Flexible Data Placement and Its Exploitation in Hadoop

This was an academic talk. CoHadoop allows for the creation of localization attributes for files which take better advantage of rack awareness and data placement for more efficient map reduce tasks on Hadoop. There are some kinks in it, but it looks pretty solid and will probably eventually find its way into mainline Hadoop in some form.

Session 8 – Andy Pavlo (Brown) on Making Fast Databases Faster

This was an interesting/hilarious lecture. Pavlo started behind a door with a bodyguard in front of it. The bodyguard was the full suit, sunglasses, and ear communicator type. He opened the door and escorted Pavlo to the podium, where he remained standing guard during the entire presentation (as well as threatening anyone who asked a question). The point of this schtick was that every time Pavlo’s advisor, Stan Zdonik, asked him to fix a specific problem with H-Store (the academic version of VoltDB), Pavlo would instead fix something that made the occurance of said problem less likely, without ever fixing the actual problem. With each fix, Zdonik became more irate until he “beat him senseless with a 2×4.” Poor Pavlo had to take a restraining order out against Zdonik, hence the bodyguard. Anyways. The actual presentation focused on three optimizations that I believe have all made their way into H-Store at this point, namely better distributing partitions such that distributed transactions are less likely to occur. One of the neat aspects of doing this is they are using machine learning to determine whether or not a transaction is distributed, and how long that may take, to better use other nodes that might be waiting for that transaction to finish. This is all available on github.

Session 9 – Alvin Cheung (MIT) on Automatic Partitioning of Database Applications

The idea here is that a developer can not always see ahead of time when something should be performed by a client application or when it should be run as a stored procedure on a database. Combined with the source code and some profiling information, the authors created a tool that will basically spit out two separate programs – one to run as the application itself, and a second program containing all of the stored procedures to be called. There is some amount of heap and object transfer logic that is generated as well. It was unclear to me how much profiling information was necessary – it wasn’t all automatically captured, and I could imagine that for significantly complex systems determining such information would be difficult.

Session 10 – Jaeyoung Do (U-Wisconsin) on Racing to the Peak: Fast Restart for SSD Buffer Pool Extension

This was a purely academic talk. The authors devised an alternate scheme that doesn’t sacrifice performance compared to other schemes while making SSD’s reach their peak performance rates faster through buffer pool shenanigans. Basically they lazily write to disk instead of forcing copies to disk, and have a log they can use to replay events in case of disk failure.

Session 13 – Yandong Mao (MIT) on Cache Craftiness for Fast Multicore Key-Value Storage

This was pretty gimmicky, but interesting nonetheless. Robert Morris and a few of his students created a single core key value store called MassTree (Massachusetts + Tree) that achieves “kick ass performance”: 5 million inserts per second and 7 million gets per second. The highlight had to be when these performance numbers were compared to VoltDB – the MassTree had a giant bar next to the tiny sliver of VoltDB. Of course, the slide was a joke. These performance numbers will pretty much plummet the second any sort of multi-core or IO scenarios begin, as it relied on keeping the entire data store in memory. Some of the techniques may be applicable to lower layers of other databases, however.

Session 14 – Ross Shaull (Brandeis) on Retro: Modular and Efficient Retrospection in a Database

This was an academic talk that has some amount of administrative use, as well. The author devised a method of adding snapshots to any database which does not currently possess them by using components common to any modern database. It does require an additional set of servers for managing and storing the snapshots, but adding these features through the Retro method to a specific database (I think it was PostgreSQL) only took about 200 lines of modification on the database source (plus all the retro specific code).

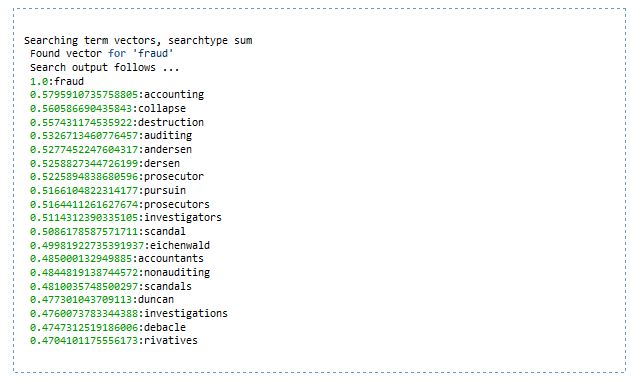

Apache Mahout

Apache Mahout