MAHOUT_LOCAL is not set; adding HADOOP_CONF_DIR to classpath.

Running on hadoop, using HADOOP_HOME=/usr/lib/hadoop-0.20

HADOOP_CONF_DIR=/usr/lib/hadoop-0.20/conf

MAHOUT-JOB: /data/mahout-distribution-0.5/examples/target/mahout-examples-0.6-SNAPSHOT-job.jar

Topic 0

===========

i [p(i|topic_0) = 0.023824791149925677

information [p(information|topic_0) = 0.004141992353710214

i'm [p(i'm|topic_0) = 0.0012614859683494856

i'll [p(i'll|topic_0) = 7.433430267661564E-4

i've [p(i've|topic_0) = 4.22765928967555E-4

Topic 1

===========

you [p(you|topic_1) = 0.013807669181244436

you're [p(you're|topic_1) = 3.431068629183266E-4

you'll [p(you'll|topic_1) = 1.0412948245383297E-4

you'd [p(you'd|topic_1) = 8.39664771688153E-5

you'all [p(you'all|topic_1) = 1.5437174634592594E-6

Topic 2

===========

you [p(you|topic_2) = 0.03938587430317399

we [p(we|topic_2) = 0.010675333661142919

your [p(your|topic_2) = 0.0038312042763726448

meeting [p(meeting|topic_2) = 0.002407369369715602

message [p(message|topic_2) = 0.0018055376982080878

Topic 3

===========

you [p(you|topic_3) = 0.036593494258252174

your [p(your|topic_3) = 0.003970284840960353

i'm [p(i'm|topic_3) = 0.0013595988902916712

i'll [p(i'll|topic_3) = 5.879175074800994E-4

i've [p(i've|topic_3) = 3.9887853536102604E-4

Topic 4

===========

i [p(i|topic_4) = 0.027838628233581693

john [p(john|topic_4) = 0.002320786569676983

jones [p(jones|topic_4) = 6.79365597839018E-4

jpg [p(jpg|topic_4) = 1.5296038761774956E-4

johnson [p(johnson|topic_4) = 9.771211326361852E-5

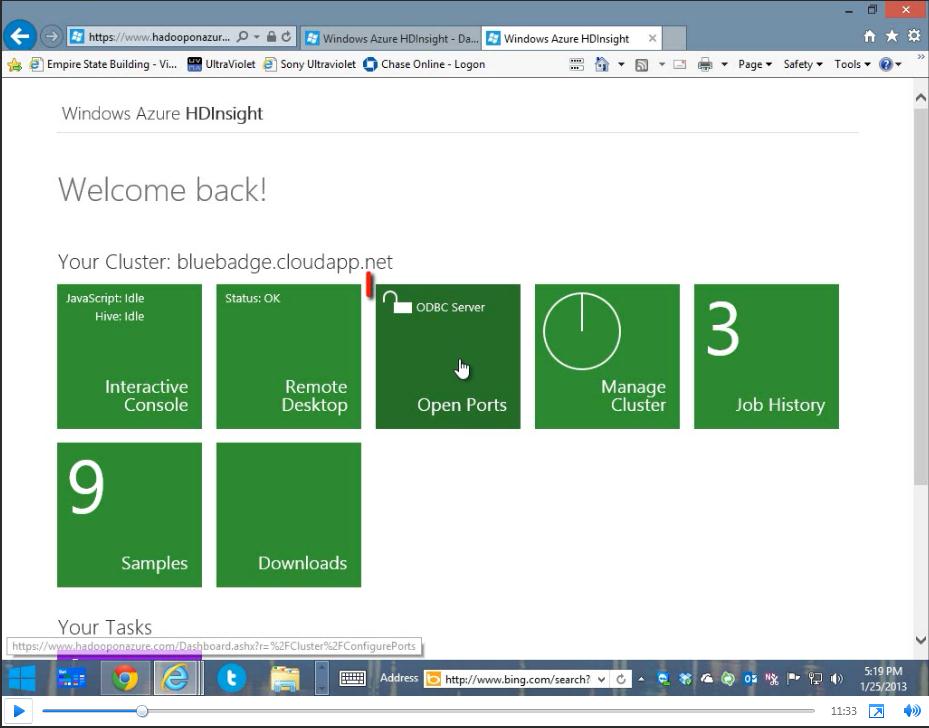

We partnered with Andrew Brust from Blue Badge Insights to integrate OfficeWriter with Hadoop and Big Data. Taking existing OfficeWriter sample projects, Andrew discusses how he created two demos showing OfficeWriter’s capabilities to work with Big Data. One demo uses C#-based MapReduce code to perform text-mining of Word docs. The other demo focuses on connecting to Hadoop through Hive.

We partnered with Andrew Brust from Blue Badge Insights to integrate OfficeWriter with Hadoop and Big Data. Taking existing OfficeWriter sample projects, Andrew discusses how he created two demos showing OfficeWriter’s capabilities to work with Big Data. One demo uses C#-based MapReduce code to perform text-mining of Word docs. The other demo focuses on connecting to Hadoop through Hive.

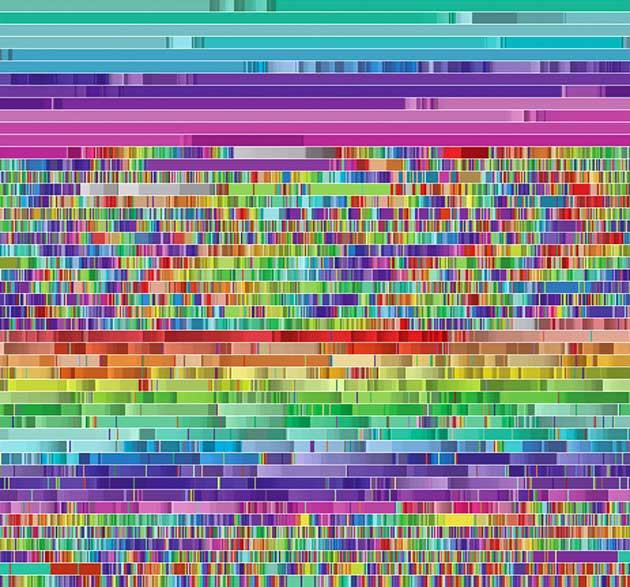

Apache Mahout

Apache Mahout

Big data is a big deal right now, and it’s only going to become a bigger deal in the future, so it makes sense to learn about as many of its aspects as you can, as quickly as you can. Or pick one and learn it very well. Or don’t pick any, if you are a staunch believer in the shelf-life of traditional data warehouses. From a machine learning deep-dive to an open-source buffet, the following five conferences provide educational and networking opportunities for both the specialists and renaissance persons among you. Attending a cool one I’ve missed? Let me know in the comments!

Big data is a big deal right now, and it’s only going to become a bigger deal in the future, so it makes sense to learn about as many of its aspects as you can, as quickly as you can. Or pick one and learn it very well. Or don’t pick any, if you are a staunch believer in the shelf-life of traditional data warehouses. From a machine learning deep-dive to an open-source buffet, the following five conferences provide educational and networking opportunities for both the specialists and renaissance persons among you. Attending a cool one I’ve missed? Let me know in the comments!