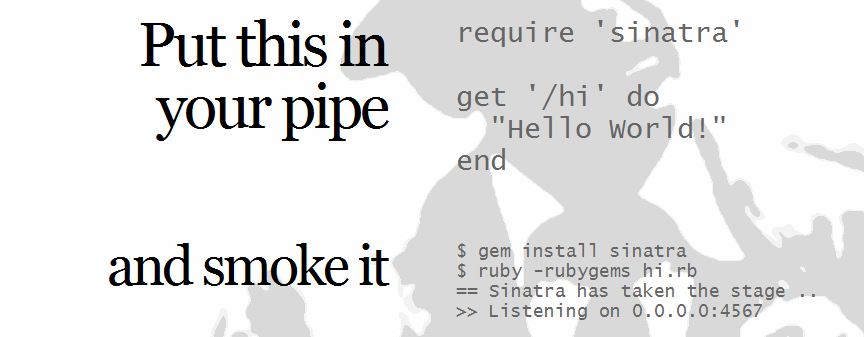

I recently had to install Sinatra on Bluehost. It proved troublesome, so I’m documenting what I did. One curious handicap: for silly administrative reasons I could not SSH into Bluehost, so everything below was done through cPanel. Here’s what I did:

Install the needed RubyGems

First, from cPanel, I went into RubyGems (under Software/Services) and I installed the following packages:

- sinatra (version 1.3.2)

- tilt (version 1.3.3)

- rack (version 1.4.1)

- fcgi (version 0.8.8)

You likely already have some of these installed, so be sure to check the list of installed gems first.
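If you can run a Ruby script on the host, a small sketch like the one below can report which of the four gems are still missing. This is not what I actually did (I just read the list in cPanel), and `missing_gems` and `installed_gem_versions` are hypothetical helper names, not part of RubyGems. It also assumes a RubyGems version that provides `Gem::Specification.each`.

```ruby
require 'rubygems'

# The gems and versions listed above.
REQUIRED_GEMS = {
  'sinatra' => '1.3.2',
  'tilt'    => '1.3.3',
  'rack'    => '1.4.1',
  'fcgi'    => '0.8.8'
}

# Returns the names of required gems whose exact version is not in `installed`.
# `installed` maps a gem name to an array of installed version strings.
def missing_gems(required, installed)
  required.select { |name, version|
    !Array(installed[name]).include?(version)
  }.map { |name, _version| name }.sort
end

# Builds the `installed` hash from whatever RubyGems can see on this machine.
# Assumes a RubyGems version that provides Gem::Specification.each.
def installed_gem_versions
  versions = Hash.new { |hash, name| hash[name] = [] }
  Gem::Specification.each { |spec| versions[spec.name] << spec.version.to_s }
  versions
end

# Example with a made-up inventory in which only rack is already present.
puts missing_gems(REQUIRED_GEMS, { 'rack' => ['1.4.1'] }).inspect
# prints ["fcgi", "sinatra", "tilt"]
```

On a real host you would call `missing_gems(REQUIRED_GEMS, installed_gem_versions)` instead of passing a made-up inventory.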

Install the “.htaccess” file

From cPanel, I went to File Manager (under Files) and chose to browse the web root (note: make sure you check “Show hidden files”). In public_html, I created a new file called “.htaccess” and put the following inside it:

```apache
# General Apache options
AddHandler fcgid-script .fcgi
AddHandler cgi-script .cgi
#Options +FollowSymLinks +ExecCGI

# If you don't want Rails to look in certain directories,
# use the following rewrite rules so that Apache won't rewrite certain requests
#
# Example:
# RewriteCond %{REQUEST_URI} ^/notrails.*
# RewriteRule .* - [L]

# Redirect all requests not available on the filesystem to Rails
# By default the cgi dispatcher is used which is very slow
#
# For better performance replace the dispatcher with the fastcgi one
#
# Example:
# RewriteRule ^(.*)$ dispatch.fcgi [QSA,L]
RewriteEngine On

# If your Rails application is accessed via an Alias directive,
# then you MUST also set the RewriteBase in this htaccess file.
#
# Example:
# Alias /myrailsapp /path/to/myrailsapp/public
# RewriteBase /myrailsapp
RewriteBase /

RewriteRule ^$ index.html [QSA]
RewriteRule ^([^.]+)$ $1.html [QSA]
RewriteCond %{REQUEST_FILENAME} !-f
RewriteRule ^(.*)$ dispatch.fcgi [QSA,L]

# In case Rails experiences terminal errors
# Instead of displaying this message you can supply a file here which will be rendered instead
#
# Example:
# ErrorDocument 500 /500.html
ErrorDocument 500 "<h2>Application error</h2>Ruby application failed to start properly"
```

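This file was taken, in large part, from the Bluehost forum post linked at the bottom of the page. The comments still talk about Rails because that is where the file came from; the rules work the same way for Sinatra.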
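To make the rewrite rules a little less magical, here is a rough Ruby model of what the three active RewriteRule lines do to a request path. It is a simplification, not Apache itself: `rewrite_target` is a hypothetical helper, the `existing_files` list stands in for the `!-f` filesystem check, and details such as query strings are ignored.

```ruby
# Rough model of the active rules in the .htaccess file.
# `path` is the request path relative to public_html, without the leading slash.
# `existing_files` is a made-up list standing in for the real filesystem.
def rewrite_target(path, existing_files)
  # RewriteRule ^$ index.html [QSA]
  path = 'index.html' if path.empty?

  # RewriteRule ^([^.]+)$ $1.html [QSA]
  path = "#{path}.html" if path =~ /\A[^.]+\z/

  # RewriteCond %{REQUEST_FILENAME} !-f
  # RewriteRule ^(.*)$ dispatch.fcgi [QSA,L]
  existing_files.include?(path) ? path : 'dispatch.fcgi'
end

files = ['index.html', 'about.html', 'style.css', 'dispatch.fcgi']

puts rewrite_target('', files)          # index.html
puts rewrite_target('about', files)     # about.html
puts rewrite_target('style.css', files) # style.css
puts rewrite_target('hi', files)        # dispatch.fcgi
```

The last case is the one that matters for Sinatra: any request that does not map to a real file ends up at dispatch.fcgi, while static files are still served directly.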

Install the “dispatch.fcgi” file

In the same directory, I created the “dispatch.fcgi” file and put the following into that:

```ruby
#!/usr/bin/ruby
#
# Sample dispatch.fcgi to make Sinatra work on Bluehost
#
# http://www.sinatrarb.com/
#

require 'rubygems'

# *** CONFIGURE HERE ***
# You must put the gems on the path
ENV["GEM_HOME"] = "/home#/XXXXX/ruby/gems"

# sinatra should load now
require 'sinatra'

module Rack
  class Request
    def path_info
      @env["REDIRECT_URL"].to_s
    end

    def path_info=(s)
      @env["REDIRECT_URL"] = s.to_s
    end
  end
end

# Define your Sinatra application here
class MyApp < Sinatra::Application
  get '/hi' do
    "Hello World!"
  end
end

builder = Rack::Builder.new do
  use Rack::ShowStatus
  use Rack::ShowExceptions

  map '/' do
    run MyApp.new
  end
end

Rack::Handler::FastCGI.run(builder)
```

You need to replace “/home#/XXXXX” with the appropriate path on your system.
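If you would rather not hard-code the path, one option is to derive it from the location of dispatch.fcgi itself. This is only a sketch and not something I tested on Bluehost: it assumes dispatch.fcgi sits directly in public_html and that public_html sits directly in your home directory, next to ruby/gems. The `gem_home_for` helper and the `/home1/example` path are made up for illustration.

```ruby
# Hypothetical helper: guess GEM_HOME from the path of dispatch.fcgi.
# Assumes <home>/public_html/dispatch.fcgi and <home>/ruby/gems.
def gem_home_for(script_path)
  public_html = File.dirname(File.expand_path(script_path))
  File.expand_path('../ruby/gems', public_html)
end

# Made-up example path; your account's home directory will differ.
puts gem_home_for('/home1/example/public_html/dispatch.fcgi')
# prints /home1/example/ruby/gems

# Inside dispatch.fcgi this would replace the hard-coded line:
# ENV["GEM_HOME"] = gem_home_for(__FILE__)
```

If the guessed directory is wrong, `require 'sinatra'` will fail just as it does with a mistyped path, so the hard-coded version is the safer starting point.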

Correct all the date times in your gemspec files

At this point, most other sources said I should be done, but I kept getting an error along the lines of:

Invalid gemspec in [D:/RailsInstaller/Ruby1.8.7/lib/ruby/gems/1.8/specifications/tilt-1.3.3.gemspec]: invalid date format in specification: "2011-08-25 00:00:00.000000000Z"

So what I had to do to fix it was go to “/ruby/gems/specifications/tilt-1.3.3.gemspec” in the file manager and change the line:

s.date = %q{2011-08-25 00:00:00.000000000Z}

to

s.date = %q{2011-08-25}

If that wonky date format shows up on any other gemspecs, you’ll likely have to alter them as well.
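I made the change by hand in the file manager, but if several gemspecs are affected and you have some way to run a script on the host, the same edit can be sketched in Ruby. The helper names `fix_gemspec_date` and `fix_gemspecs_in` are hypothetical, and the pattern assumes the date line looks like the one in tilt's gemspec.

```ruby
# Matches an s.date line whose value has a time part after the date, e.g.
#   s.date = %q{2011-08-25 00:00:00.000000000Z}
# and captures everything up to and including the YYYY-MM-DD part.
LONG_DATE = /^([ \t]*s\.date[ \t]*=[ \t]*%q\{\d{4}-\d{2}-\d{2})[ \t][^}\n]*\}/

# Returns the gemspec source with any long date trimmed to YYYY-MM-DD.
def fix_gemspec_date(source)
  source.gsub(LONG_DATE) { $1 + '}' }
end

# Rewrites every gemspec in `spec_dir` that needs the fix
# and returns the paths of the files it changed.
def fix_gemspecs_in(spec_dir)
  Dir.glob(File.join(spec_dir, '*.gemspec')).select do |path|
    original = File.read(path)
    fixed = fix_gemspec_date(original)
    next false if fixed == original
    File.open(path, 'w') { |file| file.write(fixed) }
    true
  end
end

puts fix_gemspec_date('s.date = %q{2011-08-25 00:00:00.000000000Z}')
# prints s.date = %q{2011-08-25}

# To apply it for real (the path is a placeholder, as in dispatch.fcgi):
# fix_gemspecs_in('/home#/XXXXX/ruby/gems/specifications')
```

Gemspecs that already have a plain date are left untouched, so running it more than once should be harmless.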

Hopefully, this will get you up and running. It did for me anyway.

Sources

Everything I learned I learned from the following sites and posts: