One of the big questions we had to answer for our Scrum development process was whether or not to story point bugs. Development wanted to story point bugs to help with sprint planning. The Product Owner (me) didn’t want to story point bugs, in order to keep our velocity accurate. We experimented with a few approaches and settled on one that works well for us. In this post I’ll run through the issues and solutions we came up with.

Our Problems with Bugs in Scrum

Bugs pose a bit of a problem in Scrum because of how velocity and scheduling work. The measure of progress is the amount of value added to the product, and we use story points to estimate how much of that value we can fit into a release. This is tracked as “velocity.” For developers, velocity also acts as a benchmark for deciding how many stories to include in any given sprint.

This use of velocity is basic Agile practice and works pretty well for the most part. However, bugs are going to happen no matter how good your developers are. As a Product Owner, I evaluate the bugs that come in and decide if/when to fix them. From my point of view, the bugs are scheduled just like stories… the most important things get done first.

When we get into sprint planning, Development has to figure out how much to pull into the sprint, including both stories and bugs. This is a little tougher because bugs don’t have story points, so you have to “groom” each bug during sprint planning to get a sense of how much work it requires. Sprint planning gets much more painful when it also involves grooming. You might argue that there shouldn’t be enough bugs to make this a big problem, and I wouldn’t disagree. But we’re transitioning an existing project to use Scrum, so we have a number of bugs from the B.S. (before Scrum) times. Even for new projects, sometimes bad things happen to good people and you end up with more bugs than you’d like.

Development requested that we start story pointing bugs to make sprint planning a bit easier. There are a number of reasons why I strongly suggest you DON’T do that, but we’ll leave that for another day. So, short of story pointing bugs, how can we make dealing with bugs easier in Scrum?

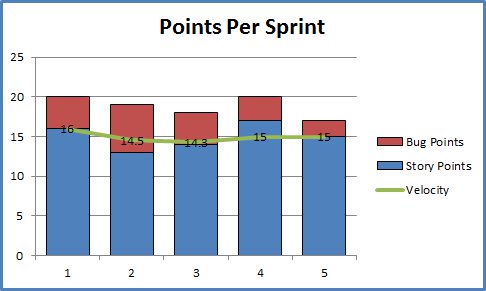

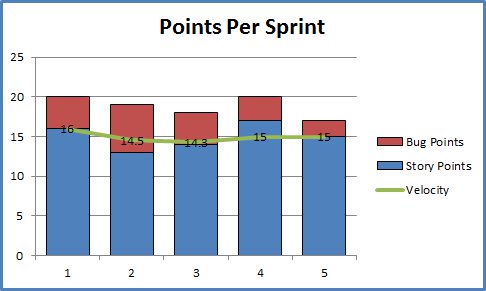

A Possible Solution: Bug Points

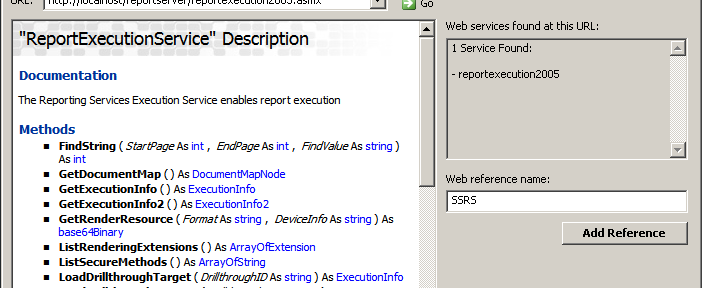

We experimented with a few solutions and settled on using both Story Points and Bug Points. A Bug Point is a Story Point, but only gets used for bugs and does not get counted in the velocity. We use the same scale as Story Points and assign them during our normal grooming sessions. Really the only difference is that we put them in a different field in JIRA (our issue tracker). Development also keeps track of “total work” for sprints, which includes both stories and bugs. However, this metric is NEVER used outside of sprint planning. At no point will I (the product owner) ever look at that metric and say “Well, our velocity is 15, but really we’re doing 20 points of total work, so I bet we can squeeze in these extra stories for the next release.”
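To make the difference between the two numbers concrete, here is a minimal sketch of how velocity and “total work” could be computed from a sprint’s issues. The `Issue` class, the issue keys, and the point values are all made up for illustration (they are not pulled from our JIRA setup); the only thing taken from the approach above is that bug points stay out of velocity.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    key: str      # hypothetical issue key
    kind: str     # "story" or "bug"
    points: int   # story points for stories, bug points for bugs


def velocity(issues):
    """Sum story points only; bug points never count toward velocity."""
    return sum(i.points for i in issues if i.kind == "story")


def total_work(issues):
    """Sum story points and bug points; for sprint planning use only."""
    return sum(i.points for i in issues)


# Made-up sprint contents, chosen to match the 15 vs. 20 example above.
sprint = [
    Issue("APP-1", "story", 8),
    Issue("APP-2", "story", 7),
    Issue("APP-3", "bug", 3),
    Issue("APP-4", "bug", 2),
]

print(velocity(sprint))    # 15
print(total_work(sprint))  # 20
```

Keeping the two calculations as separate functions mirrors keeping the two values in separate fields: it is harder to mix them up by accident.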

When it comes to sprint planning, the team gets together and works out what can fit into the next sprint. The bugs in the backlog are prioritized along with the stories because some bugs need to be fixed now and others can wait for a while. Since bugs are also pointed, the team can easily look at the backlog and determine how far they can get. They don’t have to reevaluate each bug as it comes up in the backlog; they’ve already done that work and can just keep rolling.
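As a rough illustration of “determine how far they can get,” the sketch below walks a prioritized backlog and stops at the first item that no longer fits. The backlog entries and the capacity of 20 are invented, and stopping at the first item that doesn’t fit (rather than skipping ahead to smaller items) is an assumption about strict priority order, not a rule we follow mechanically.

```python
def plan_sprint(backlog, capacity):
    """Take items in priority order until the next one would exceed capacity.

    backlog: list of (key, kind, points) tuples, highest priority first.
    capacity: total work (story points + bug points) the team expects to finish.
    """
    planned, used = [], 0
    for key, kind, points in backlog:
        if used + points > capacity:
            break  # assumption: respect priority order, don't skip ahead
        planned.append(key)
        used += points
    return planned, used


# Hypothetical backlog: stories and bugs are prioritized together.
backlog = [
    ("APP-10", "story", 5),
    ("APP-11", "bug", 3),
    ("APP-12", "story", 8),
    ("APP-13", "bug", 2),
    ("APP-14", "story", 5),
]

planned, used = plan_sprint(backlog, capacity=20)
print(planned)  # ['APP-10', 'APP-11', 'APP-12', 'APP-13']
print(used)     # 18
```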

This works well for us because it’s easier to know what’s going to fit in the sprint. It also gives the team a somewhat more realistic view of how much they can fit in a particular sprint. Note that I said a particular sprint, not a release. Remember: NEVER consider bug points during release planning! If the team has bugs in four straight sprints and then has none in the next one, their total-work history gives them a better idea of how much they can fit without any bugs to work on.

There are a number of other benefits. For one, you get a better sense of how much extra work you’re creating by letting bugs slip through the cracks when implementing a story. This helps to spot quality issues. If you’re constantly creating more bugs than value then you know something is wrong.
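One simple way to watch for that is to compare bug points to story points sprint by sprint. The sketch below is only an illustration: the sprint history is invented, and treating a ratio above 1.0 as the warning sign is my reading of “more bugs than value,” not a threshold we have formally adopted.

```python
def bug_ratio(story_points, bug_points):
    """Bug points completed per story point completed in one sprint."""
    if story_points == 0:
        return float("inf") if bug_points else 0.0
    return bug_points / story_points


# Made-up history: (story points, bug points) per sprint.
history = [(15, 5), (12, 9), (8, 11)]

# Flag sprints where bug work outweighed new value (assumed threshold: 1.0).
flagged = [
    sprint_number
    for sprint_number, (stories, bugs) in enumerate(history, start=1)
    if bug_ratio(stories, bugs) > 1.0
]
print(flagged)  # [3]
```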

What’s Not So Good About This?

It’s very easy to fall into the trap of using bug points like story points outside of sprint planning. As I mentioned earlier, including bugs in your long-term planning is a bad idea. Bug points are so similar to story points that it’s easy to forget that they AREN’T. Other than that, this seems like a pretty good solution to me.

It works for us, but how about you? I’d love to hear about how others are handling bugs in the backlog.